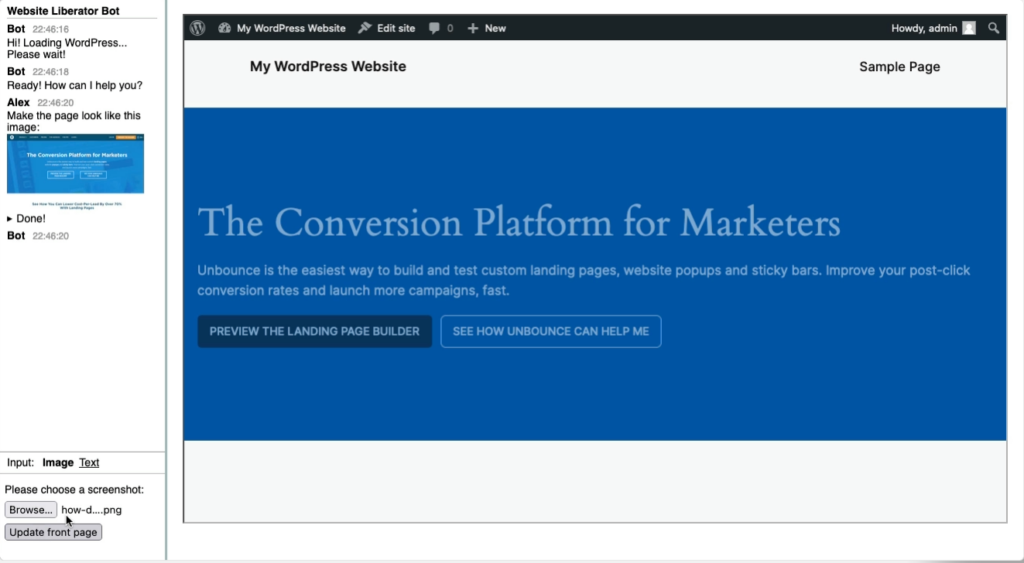

I’ve written about my cll tool before, and it is still my go-to way of communicating with LLMs. See the GitHub repo. As a developer, having LLMs available in the Terminal is very helpful to me.

Write a file to disk

A lot of my prompts ask the LLM to create a file for me. This is often a fast starting point for working on something new. It makes me realize how much time it takes to start from zero, and even if the LLM doesn’t get all the details right on the first try, it gives me a boost.

The downside is that I need to do a lot of copy-pasting from the LLM output. So, if you already anticipate that you’ll receive a file, you can set the -f option and cll will write the suggested file contents to disk:

![cll -f please write me a wordpress php plugin that will log all insert and update calls to a custom post type
Model: gpt-4o-mini via OpenAI
System prompt: When recommending file content it must be prepended with the proposed filename in the form: "File: filename.ext"
> please write me a wordpress php plugin that will log all insert and update calls to a custom post type
File: log-custom-post-type.php
<?php
/**
 * Plugin Name: Custom Post Type Logger
 * Description: Logs all insert and update calls to a specified custom post type.
 * Version: 1.0
 * Author: Your Name
 */
[...]
// Hook into the save_post action.
add_action( 'save_post', 'log_custom_post_type_changes', 10, 2 );
Instructions:
1. Replace 'your_custom_post_type' with the actual name of your custom post type.
2. Save the code into a file named log-custom-post-type.php.
3. Upload the file to your WordPress installation's wp-content/plugins directory.
4. Activate the plugin through the WordPress admin interface.
5. All insert and update actions for the specified custom post type will be logged to a file named custom-post-type-log.txt in the same directory as the plugin file. Adjust the logging method as needed for your environment or logging preferences.
Writing 1248 bytes to file: log-custom-post-type.php](https://alex.kirk.at/wp-content/uploads/sites/2/2024/08/cll-write-to-file.png)
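
The system prompt in the screenshot asks the model to prefix file content with a "File: filename.ext" line, and that is what makes the output machine-readable. Here is a rough sketch of how a client could pick up that header and the code block following it. It is written in Python for brevity and is not cll's actual implementation; `extract_files` and `write_files` are hypothetical names, and the sketch assumes the model puts the file content into a fenced code block right after the header.

```python
import re
from pathlib import Path

FENCE = "`" * 3  # the Markdown code fence marker, built here to keep the pattern readable

# Assumed response shape: a "File: name" line, optional blank lines, then a fenced block.
FILE_BLOCK = re.compile(
    r"^File:[ \t]*(?P<name>\S+)[ \t]*\n"  # header line requested by the system prompt
    r"(?:[ \t]*\n)*"                      # optional blank lines
    + FENCE + r"[^\n]*\n"                 # opening fence with an optional language tag
    r"(?P<body>.*?)"                      # the file content itself (non-greedy)
    r"^" + FENCE + r"[ \t]*$",            # closing fence on its own line
    re.MULTILINE | re.DOTALL,
)


def extract_files(llm_output: str) -> dict:
    """Map each proposed filename to the content of the code block after it."""
    return {m.group("name"): m.group("body") for m in FILE_BLOCK.finditer(llm_output)}


def write_files(files: dict, target_dir=".") -> None:
    """Write every extracted file into target_dir."""
    for name, body in files.items():
        # Use only the basename so a suggested name cannot point outside target_dir.
        path = Path(target_dir) / Path(name).name
        data = body.encode("utf-8")
        path.write_bytes(data)
        print(f"Writing {len(data)} bytes to file: {path.name}")
```

Anything outside the fenced block, such as the "Instructions" list in the screenshot, is ignored by this sketch, so only the file content ends up on disk.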

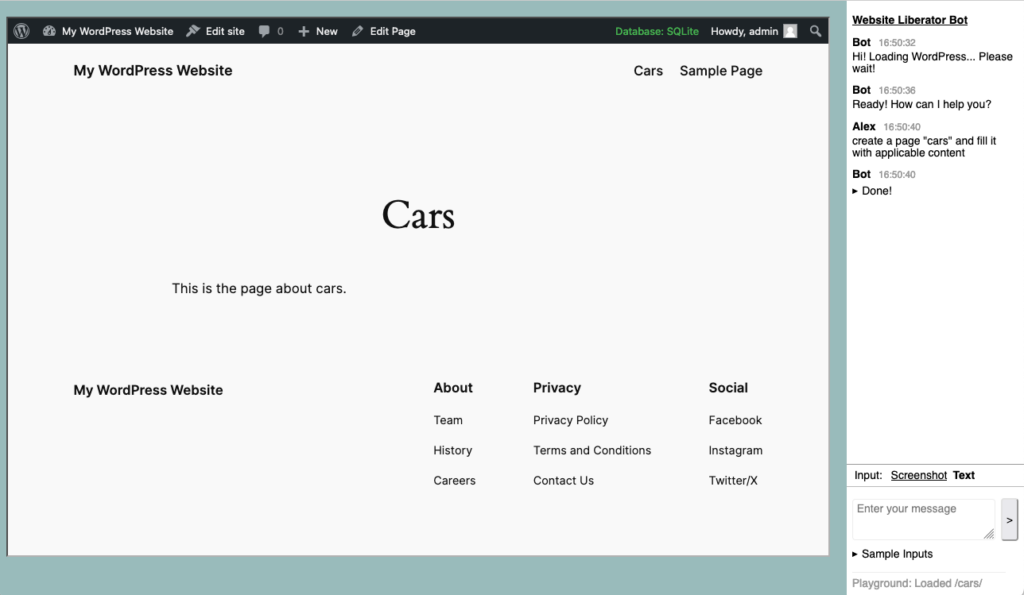

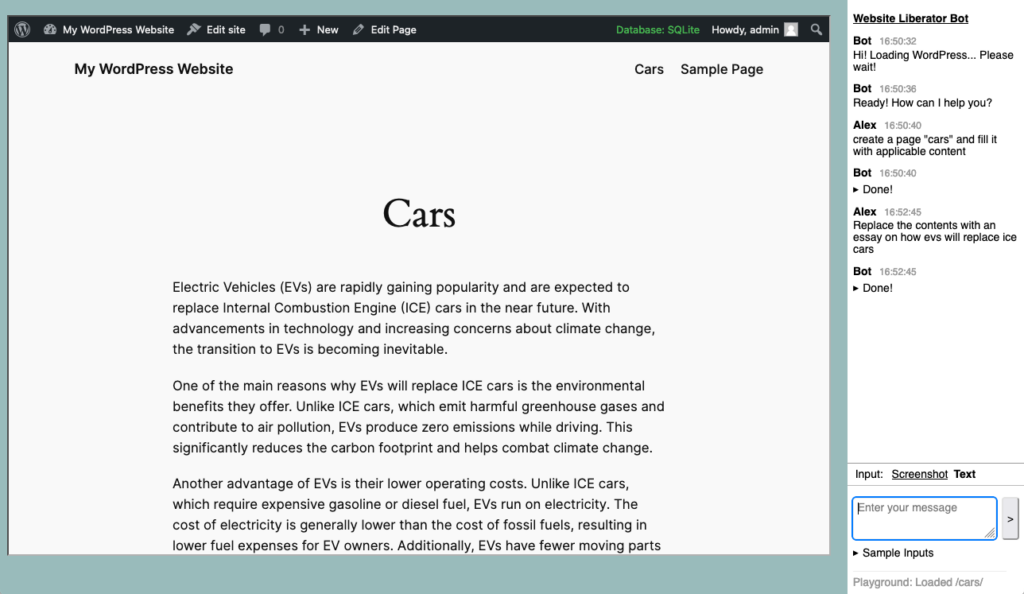

Modify a file (or multiple)

Further conversation would update the file from above, but you can also start with an existing file. I had previously modified cll to handle piped input well, but it can be very useful to just give it a local file using the -i parameter. If you combine it with -f, it will automatically update the file as well.

![cll -i log-custom-post-type.php -f modify this so that the insert statements are stored in a custom post type
Model: gpt-4o-mini via OpenAI
System prompt: When recommending file content it must be prepended with the proposed filename in the form: "File: filename.ext"
> modify this so that the insert statements are stored in a custom post type
Local File: log-custom-post-type.php 1248 bytes:
 * Plugin Name: Custom Post Type Logger
 * Description: Logs all insert and update calls to a specified custom post type.
 * Version: 1.0
Add file content to the prompt? [y/N]: y
File: log-custom-post-type.php
 * Plugin Name: Custom Post Type Logger
 * Description: Logs all insert and update calls to a specified custom post type.
 * Version: 1.0
 * Author: Your Name
 */
[...]
add_action( 'init', 'register_log_entry_[...]
Backing up existing file: log-custom-post-type.php → log-custom-post-type.php.bak.1724665121
Writing 2459 bytes to file: log-custom-post-type.php](https://alex.kirk.at/wp-content/uploads/sites/2/2024/08/cll-modify-local-files.png)
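
As the screenshot shows, the existing file is backed up under a `.bak.<timestamp>` name before the new version is written. A minimal sketch of that pattern could look like the following. It is in Python and not taken from cll's source: `backup_and_write` and its `now` parameter are hypothetical, and treating the suffix as a Unix timestamp is an assumption based on the file name in the screenshot.

```python
import shutil
import time
from pathlib import Path


def backup_and_write(path, new_content, now=None):
    """Overwrite path with new_content, keeping a timestamped copy of any old version.

    Returns the backup path, or None if there was nothing to back up.
    """
    target = Path(path)
    backup = None
    if target.exists():
        # Assumed naming scheme: <original name>.bak.<unix timestamp>
        stamp = int(time.time() if now is None else now)
        backup = target.with_name(f"{target.name}.bak.{stamp}")
        shutil.copy2(target, backup)  # copy2 also preserves the modification time
    target.write_text(new_content, encoding="utf-8")
    return backup
```

Copying first and overwriting second means a failed write still leaves the previous version on disk, which matters when an LLM rewrites a file you have not committed yet.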

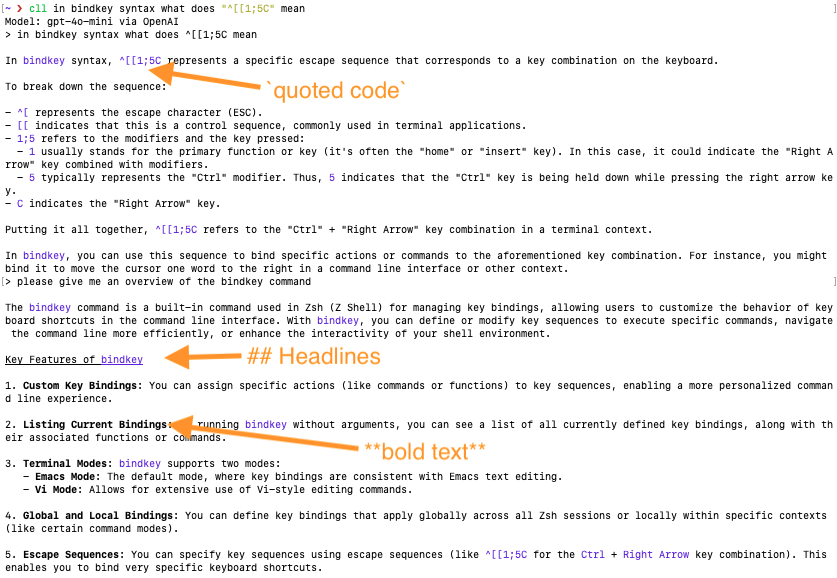

Output Formatting

LLM output is often Markdown-like, with headlines, bold text, inline code, and code blocks. cll now prints these more nicely in the Terminal.
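
To give an idea of what such formatting involves, here is a small sketch that maps a few Markdown constructs to ANSI escape sequences. It is a Python illustration under my own assumptions, not cll's code: the function names and the choice of styles (bold and underlined headlines, cyan inline code, dimmed code blocks) are made up for the example.

```python
import re

FENCE = "`" * 3  # Markdown code fence marker
BOLD, DIM, UNDERLINE, CYAN, RESET = "\033[1m", "\033[2m", "\033[4m", "\033[36m", "\033[0m"


def render_line(line: str) -> str:
    """Style a single line of Markdown-like text outside of code blocks."""
    heading = re.match(r"^#{1,6}\s+(.*)$", line)
    if heading:
        return f"{BOLD}{UNDERLINE}{heading.group(1)}{RESET}"
    # **bold** becomes bold text, `code` becomes cyan text.
    line = re.sub(r"\*\*(.+?)\*\*", lambda m: BOLD + m.group(1) + RESET, line)
    line = re.sub(r"`([^`]+)`", lambda m: CYAN + m.group(1) + RESET, line)
    return line


def render(text: str) -> str:
    """Render a whole response, dimming the content of fenced code blocks."""
    out, in_code = [], False
    for line in text.splitlines():
        if line.startswith(FENCE):
            in_code = not in_code  # toggle at every fence and hide the marker itself
            continue
        out.append(f"{DIM}{line}{RESET}" if in_code else render_line(line))
    return "\n".join(out)
```

Working line by line fits a CLI client, because a response can be styled as it arrives instead of waiting for the full text.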

These little additions keep cll useful for me. I know that it’s the typical engineer’s “I’ll roll my own”, but I like that it automatically falls back to Ollama locally if there is no network, has the nice output formatting, can work with files, and is always quickly available in the Terminal. You can use it, or get inspired for what you’d ask from a CLI LLM client. Check out the GitHub repo at https://github.com/akirk/cll