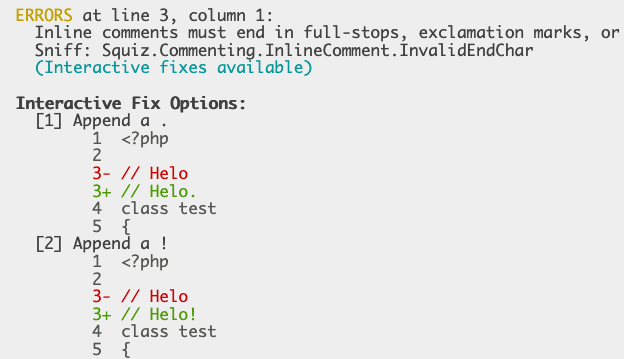

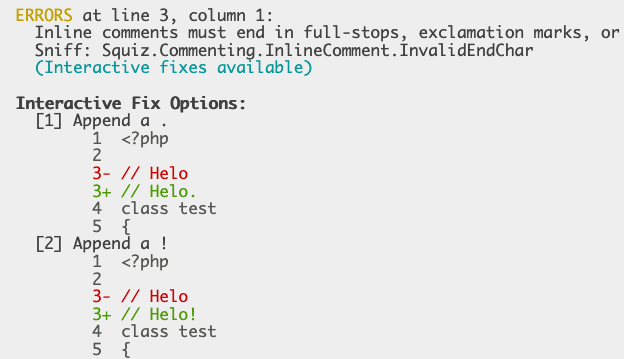

It can be very time-consuming to resolve issues detected by PHP Code Sniffer. An interactive mode for phpcbf could make fixing them much faster!

I have updated my Playground Step Library (which I have written about before), the tool that lets you use more advanced steps in WordPress Playground, so that it can now also be used programmatically: it is now an npm package, playground-step-library. Behind the scenes, this dominoed into migrating it to TypeScript and restructuring the code…